A simple, privacy-focused speech-to-text CLI tool that records your voice, transcribes it locally using faster-whisper, and copies the transcription to your clipboard. Perfect for interacting with Claude Code, VS Code, Slack, or any application where native dictation falls short.

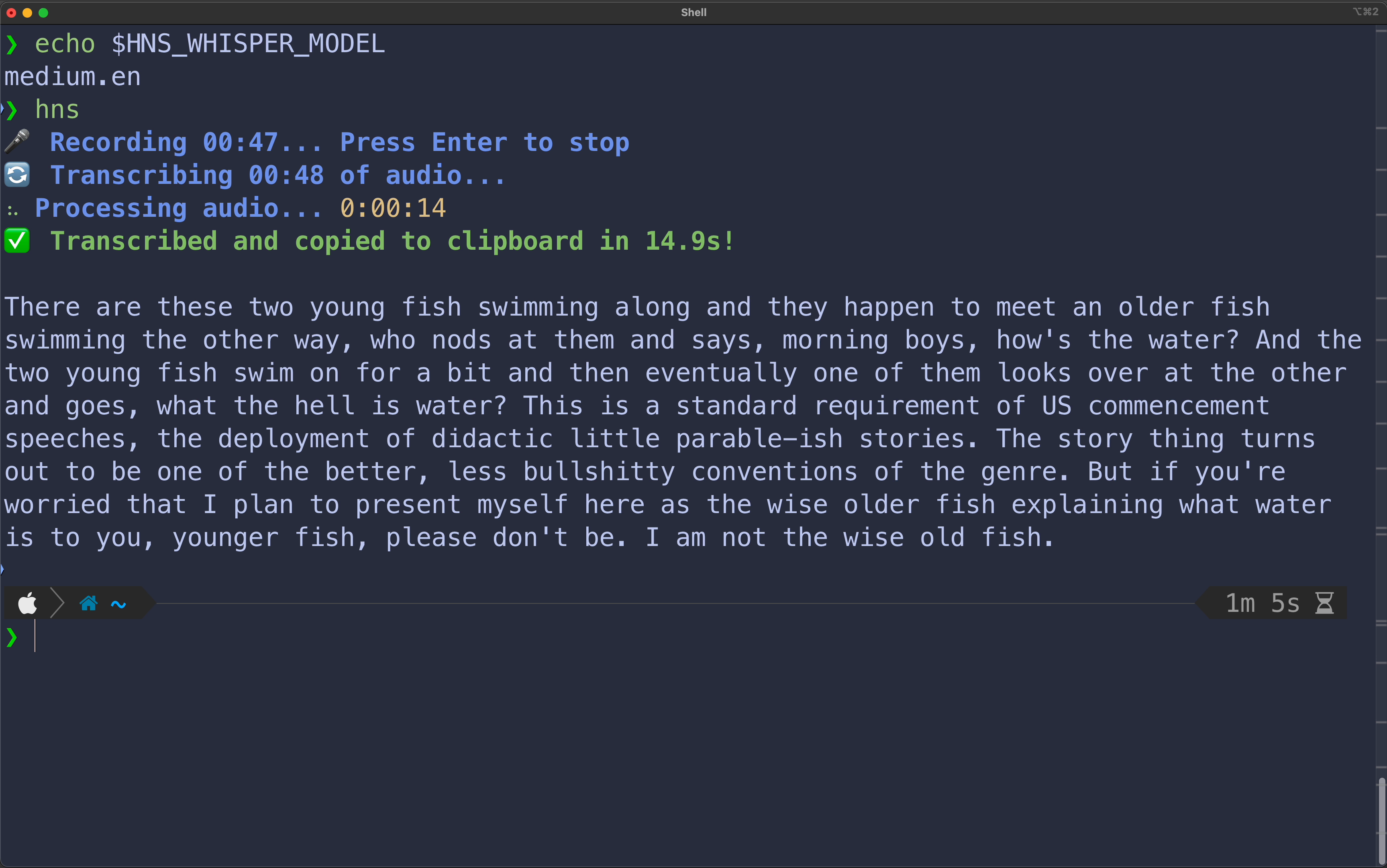

Click the image below to see the demo ⬇️ (warning: demo has sound)

- 100% Local & Private: Audio is processed entirely on your local machine. No data leaves your device

- Works Offline: After the initial model download, no internet connection is required

- Instant Clipboard: Transcribed text is automatically copied to your clipboard for immediate pasting

- Multi-Language Support: Transcribe in any language supported by Whisper

- Configurable: Choose models and languages via environment variables

- Focused - Does one thing well: speech → clipboard

- Open Source - MIT licensed, fully transparent

- Claude Code & AI Assistants: Perfect for Claude Code or any AI interface without native dictation. Run

hns, speak your prompt, then paste into Claude Code. - Brain Dump → Structured Output: Ramble your scattered thoughts, then paste into an LLM to organize:

hns # "So I'm thinking about the refactor... we need to handle auth, but also consider caching..." # Paste to LLM: "Create a structured plan from these thoughts:"

- Communication: Compose Slack messages, emails, or chat responses hands-free.

- Note-Taking: Quickly capture thoughts and ideas without switching from the keyboard.

- Accessibility: Helpful for users who find typing difficult or painful.

Install via uv (recommended):

uv tool install hnsor pipx:

pipx install hnsor pip:

pip install --user hnsThe first time you run hns, it will download the default Whisper model (base). This requires an internet connection and may take a few moments. Subsequent runs can be fully offline.

- Run the command in your terminal:

hns

- The tool will display

🎤 Recording.... Speak into your microphone. - Press

Enterwhen you have finished. - The transcribed text is automatically copied to your clipboard and printed to the console.

To see all available transcription models:

hns --list-modelsSelect a model by setting the HNS_WHISPER_MODEL environment variable. The default is base. For higher accuracy, use a larger model like medium or large-v3.

# Use the 'small' model for the current session

export HNS_WHISPER_MODEL="small"

hnsTo make the change permanent, add export HNS_WHISPER_MODEL="<model_name>" to your shell profile (.zshrc, .bash_profile, etc.).

By default, Whisper auto-detects the language. To force a specific language, set the HNS_LANG environment variable.

# Use an environment variable for Japanese

export HNS_LANG="ja"

hnsThis project is licensed under the MIT License.